Key Takeaways:

- AI demand for electricity is outpacing power grid capacity.

- The future of data centers lies in maximizing existing power supplies and eliminating inefficiencies in energy delivery.

- Claros is rethinking data center architecture—from chip to grid—to unlock smarter, more scalable power infrastructure.

Power delivery inefficiency is not just a grid problem; it’s also a rack-to-load problem. To address it, we have to look beyond the grid itself to the “last mile” of power delivery, where rack-to-load losses not only waste energy but also significantly constrain compute density and performance.

Mapping Rack-to-Load Losses

In “The Power Bottleneck: What’s Limiting Data Center Growth?”, we discussed the limited capacity of existing grids to keep up with the growing energy demands fueled by AI-driven data centers. The blog post highlighted the urgent need to optimize electricity consumption at data centers and called for smarter, more efficient, and scalable energy systems to support the future of AI infrastructure, an imperative we should all be working toward. But to achieve this, we should first understand the mechanics of power delivery and loss.

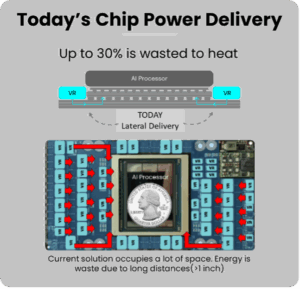

Once electricity enters a data center rack, a significant amount of energy is lost before it ever reaches the processors. These losses occur as power is stepped down from 48V or 12V through the board-level voltage regulator modules (VRMs) that supply the xPU. Here, xPU is an umbrella term for a range of specialized processing units, such as the graphics processing unit (GPU), central processing unit (CPU), and tensor processing unit (TPU), each designed to handle specific workloads more efficiently.
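Because each conversion stage loses some energy, the losses compound: the overall efficiency of converters connected in series is the product of the individual stage efficiencies. The sketch below illustrates that arithmetic. It assumes a hypothetical two-stage chain (a 48V-to-12V converter followed by a 12V-to-core VRM) with illustrative efficiencies; these are placeholders, not measured or Claros figures.

```python
# Minimal sketch: end-to-end efficiency of power conversion stages in series.
# Stage names and efficiency values are hypothetical placeholders, not measurements.
from math import prod


def chain_efficiency(stage_efficiencies):
    """Overall efficiency of series-connected stages (product of each stage)."""
    for eta in stage_efficiencies:
        if not 0.0 < eta <= 1.0:
            raise ValueError(f"efficiency must be in (0, 1], got {eta}")
    return prod(stage_efficiencies)


def power_at_load(input_watts, stage_efficiencies):
    """Power that reaches the load after passing through every stage."""
    return input_watts * chain_efficiency(stage_efficiencies)


# Hypothetical chain: 48V -> 12V intermediate bus, then 12V -> xPU core voltage.
stages = {"48V-to-12V converter": 0.97, "12V-to-core VRM": 0.90}
rack_input_w = 1000.0
delivered_w = power_at_load(rack_input_w, list(stages.values()))
print(f"delivered: {delivered_w:.1f} W, lost as heat: {rack_input_w - delivered_w:.1f} W")
```

Even when every stage looks efficient on its own, the product shrinks with each stage added, which is why removing or consolidating conversion steps matters.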

Traditional lateral power delivery relies on electrically long, high-current paths across printed circuit boards (PCBs). Because these paths operate at low voltage, they must carry high current, which generates excess heat. That heat further reduces efficiency and limits how densely compute resources can be packed. Together, step-down conversion losses and these resistive path losses can account for up to ~30% of energy wasted as heat.
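The link between low voltage, high current, and heat follows from two textbook relations: for a fixed power P, the current is I = P / V, and the resistive loss in the path is I²R. Conduction loss therefore grows with the inverse square of the distribution voltage. The sketch below assumes a hypothetical 1 kW load and a hypothetical 0.1 mΩ path resistance purely for illustration, and it ignores the extra current needed to cover the loss itself.

```python
# Minimal sketch of why low-voltage distribution is lossy: for a fixed power P,
# the current is I = P / V and the resistive loss in the path is I**2 * R.
# LOAD_W and PATH_R_OHM are hypothetical illustration values.


def path_current(power_w: float, voltage_v: float) -> float:
    """Current needed to deliver power_w at voltage_v (I = P / V)."""
    return power_w / voltage_v


def conduction_loss(power_w: float, voltage_v: float, resistance_ohm: float) -> float:
    """Resistive (I^2 R) loss in a path carrying that current."""
    current = path_current(power_w, voltage_v)
    return current ** 2 * resistance_ohm


LOAD_W = 1000.0       # hypothetical xPU load
PATH_R_OHM = 0.0001   # hypothetical 0.1 milliohm path resistance

for volts in (48.0, 12.0, 1.0):
    loss = conduction_loss(LOAD_W, volts, PATH_R_OHM)
    print(f"{volts:>5.1f} V: {path_current(LOAD_W, volts):7.1f} A, "
          f"{loss:8.3f} W lost ({100 * loss / LOAD_W:.2f}% of load)")
```

With the same path resistance, dropping from 12V to 1V raises the current twelvefold and the I²R loss by a factor of 144.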

To meet the growing demands of AI workloads, reimagining power delivery architecture is essential. Reducing these inefficiencies will not only save energy; it will also unlock higher performance and greater compute density at data centers.

Architectural Options for Improvement

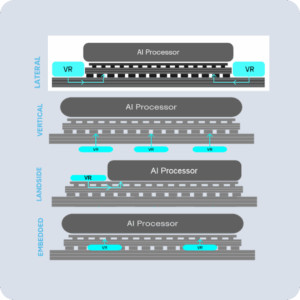

As AI workloads continue to scale, so must the efficiency and sophistication of power delivery architectures. The industry is embracing several promising alternatives to lateral power delivery, including backside, landside, and embedded power delivery. Each presents its own advantages and trade-offs.

Backside Power Delivery (Vertical)

The backside approach uses through-silicon vias (TSVs) and backside interposers to deliver power more directly to the die. This significantly shortens the distance electricity must travel, enabling higher current densities and improving overall efficiency.
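Why path length matters can be seen from the resistance of a uniform conductor, R = ρL / (w·t): resistance, and therefore I²R loss at a given current, scales linearly with length. The sketch below compares a hypothetical 50 mm lateral copper path with a hypothetical 1 mm vertical one. It holds the cross-section constant to isolate the effect of length, which is a simplification; real power delivery networks use many parallel planes, vias, and TSVs with different geometries.

```python
# Minimal sketch: DC resistance of a rectangular copper conductor, R = rho * L / (w * t),
# comparing a long lateral path with a short vertical one.
# Geometries and current are hypothetical; cross-section is held constant to isolate length.

COPPER_RESISTIVITY_OHM_M = 1.68e-8  # approximate resistivity of copper near room temperature


def conductor_resistance(length_m, width_m, thickness_m, rho=COPPER_RESISTIVITY_OHM_M):
    """DC resistance of a uniform rectangular conductor."""
    return rho * length_m / (width_m * thickness_m)


def i2r_loss(current_a, resistance_ohm):
    """Power dissipated as heat in a resistance carrying current_a."""
    return current_a ** 2 * resistance_ohm


CURRENT_A = 500.0  # hypothetical core-rail current

lateral = conductor_resistance(length_m=50e-3, width_m=20e-3, thickness_m=70e-6)
vertical = conductor_resistance(length_m=1e-3, width_m=20e-3, thickness_m=70e-6)

for name, r in (("lateral (50 mm)", lateral), ("vertical (1 mm)", vertical)):
    print(f"{name}: {r * 1e3:.3f} mOhm, {i2r_loss(CURRENT_A, r):.2f} W at {CURRENT_A:.0f} A")
```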

Landside Power Delivery

Landside delivery, which routes power through the top of the chip, can be attractive for certain applications but may not be ideal for large compute dies, as it competes for valuable surface area that would otherwise be used for logic.

Embedded Power Delivery

A particularly compelling innovation is embedded power delivery, in which integrated voltage regulators (IVRs) are placed directly in the substrate or adjacent to the die. This architecture eliminates the need for long PCB traces and delivers power precisely where it’s needed, at the xPU. The benefits are substantial: reduced I²R losses, higher current densities, support for advanced power management systems, and freed-up board real estate. It also simplifies the overall power delivery network, making it easier to scale and manage. However, the challenge currently facing industry and academia is thermal management of such a stack-up, both in extracting heat from it and in handling potential thermal-plane interactions between the voltage regulator(s) and the xPU(s).

Claros’s Solution to the Problem

Claros’s IVR product focuses on backside power delivery, which lays the foundation for more efficient and scalable AI systems. Future products will move toward substrate-embedded power, the holy grail of energy delivery, which allows power management components to be built directly into the layers of each chip’s substrate. The result is less wasted energy and improved reliability. With our innovative approach to achieving truly high power-delivery density, we are focused on delivering scalable solutions for next-generation, high-compute loads that demand compact integration with no wasted space.

Beyond Efficiency: Enabling Performance Gains

While energy efficiency is a critical driver, the value of advanced power delivery architectures goes beyond just saving watts. For commercial products, especially those in AI and high-performance computing, performance per watt is a key differentiator. Embedded power solutions like IVRs enable faster, more responsive systems by minimizing voltage droop and improving transient response. Both directly affect compute performance: the less the supply sags during sudden load changes, the less voltage margin the xPU must reserve, leaving more headroom for higher clock speeds or lower operating voltage.
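A common first-order way to reason about droop is a lumped resistance-inductance model of the power delivery path: ΔV ≈ ΔI·R + L·(ΔI / t_rise). Moving the regulator closer to the load lowers both R and L, so the same load step produces a smaller sag. The sketch below is our simplification, not Claros’s design method; it ignores decoupling capacitance and regulator control loops, and every component value is hypothetical.

```python
# Minimal sketch: first-order droop estimate for a load step through a lumped R-L path,
#   delta_V ~= delta_I * R_pdn + L_pdn * (delta_I / t_rise)
# Ignores decoupling capacitance and regulator control loops; all values are hypothetical.


def droop_estimate(delta_i_a, rise_time_s, r_pdn_ohm, l_pdn_h):
    """Approximate supply droop (volts) for a current step of delta_i_a amps."""
    resistive = delta_i_a * r_pdn_ohm                # steady I*R drop
    inductive = l_pdn_h * delta_i_a / rise_time_s    # L*di/dt drop during the edge
    return resistive + inductive


STEP_A = 200.0   # hypothetical load step
RISE_S = 1e-6    # hypothetical 1 microsecond edge

far = droop_estimate(STEP_A, RISE_S, r_pdn_ohm=0.5e-3, l_pdn_h=100e-12)    # distant regulator
near = droop_estimate(STEP_A, RISE_S, r_pdn_ohm=0.05e-3, l_pdn_h=10e-12)   # nearby regulator
print(f"distant regulator: {far * 1e3:.1f} mV, nearby regulator: {near * 1e3:.1f} mV")
```

In this toy model, a tenfold reduction in path resistance and inductance yields a tenfold reduction in droop; real networks behave less linearly once capacitance and control loops are included.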

And although energy savings alone may not motivate everyone, we all care about performance. Whether you’re building AI models, running real-time inference, or managing massive data pipelines, the ability to pack more compute into a smaller footprint with better thermal and power efficiency translates into faster results, lower latency, and a competitive edge.

Delivering Smarter Power at the Point of Compute

At Claros, we believe the future of compute performance starts with rethinking how power is delivered and evolving beyond legacy architectures. Our solutions embody the principle of “Power Delivery, Evolved, Embedded,” a new standard for energy efficiency (from chip to meter) and performance in AI infrastructure.

Claros’s technology is designed to tackle not just wasted energy, but wasted potential. By embedding intelligence and precision into the power delivery path, we eliminate inefficiencies that limit compute density and throttle performance.

With a DC-native mindset and advanced integrated voltage regulation (IVR), Claros brings grid-to-load efficiency full circle, delivering power exactly where it’s needed, when it’s needed. This approach lays the foundation for sustainable, high-density compute, empowering data centers to scale intelligently by using every megawatt smarter, not just consuming more of them.

Contact us to explore how you can collaborate with Claros to fix the last mile of power delivery. And be on the lookout for next month’s blog post, where we will explore how we are applying our efficiency and performance mindset to the full data center power chain. It’s an exciting step forward as Claros and industry partners prepare for the future of AI factories.